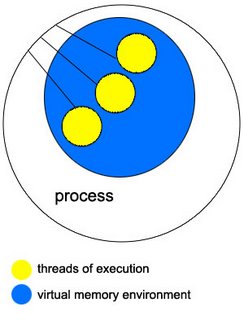

Before we install lsof, a few words on how it works. Lsof reads the kernel's proc structures to obtain information about the open files of a UNIX dialect, relates that information to the user structure, and then reads the open file structures that are normally stored in the user structure. From the 00PORTING file distributed with lsof:

Lsof stores information from the proc and user structures in an

internal, local proc structure table. It then processes the open

file structures by reading the file system nodes that lie behind

them, extracting and storing relevant data in internal local file

structures that are linked to the internal local process structure.

Once all data has been gathered, lsof reports it from its internal,

local tables.

Installing lsof

The package used here installs lsof under /usr/local.

-bash-3.00$ cd /home/fmashraqi/install/

-bash-3.00$ wget ftp://ftp.sunfreeware.com/pub/freeware/sparc/10/lsof-4.77-sol10-sparc-local.gz

--23:04:59-- ftp://ftp.sunfreeware.com/pub/freeware/sparc/10/lsof-4.77-sol10-sparc-local.gz

=> `lsof-4.77-sol10-sparc-local.gz'

Resolving ftp.sunfreeware.com... 66.193.208.66

Connecting to ftp.sunfreeware.com[66.193.208.66]:21... connected.

Logging in as anonymous ... Logged in!

==> SYST ... done. ==> PWD ... done.

==> TYPE I ... done. ==> CWD /pub/freeware/sparc/10 ... done.

==> PORT ... done. ==> RETR lsof-4.77-sol10-sparc-local.gz ... done.

Length: 475,821 (unauthoritative)

100%[=================================================================================================================>] 475,821 1.02M/s

23:05:00 (1.01 MB/s) - `lsof-4.77-sol10-sparc-local.gz' saved [475821]

[root@db:/home/fmashraqi/install] ls

SUNWhea lsof-4.77-sol10-sparc-local.gz

bench sysbench

gcc test.c

innotop

[root@db:/home/fmashraqi/install] gunzip lsof-4.77-sol10-sparc-local.gz

[root@db31:/home/fmashraqi/install] pkgadd -d lsof-4.77-sol10-sparc-local

The following packages are available:

1 SMClsof lsof

(sparc) 4.77

Select package(s) you wish to process (or 'all' to process

all packages). (default: all) [?,??,q]: y

ERROR: Entry does not match available menu selection. Enter the number

of the menu item you wish to select, or the token which is associated

with the menu item, or a partial string which uniquely identifies the

token for the menu item. Enter ?? to reprint the menu.

Select package(s) you wish to process (or 'all' to process

all packages). (default: all) [?,??,q]: 1

Processing package instance <SMClsof> from </home/fmashraqi/install/lsof-4.77-sol10-sparc-local>

lsof(sparc) 4.77

Vic Abell

Using </usr/local> as the package base directory.

## Processing package information.

## Processing system information.

5 package pathnames are already properly installed.

## Verifying disk space requirements.

## Checking for conflicts with packages already installed.

## Checking for setuid/setgid programs.

The following files are being installed with setuid and/or setgid

permissions:

/usr/local/bin/lsof

/usr/local/bin/sparcv7/lsof

/usr/local/bin/sparcv9/lsof

Do you want to install these as setuid/setgid files [y,n,?,q] y

Installing lsof as <SMClsof>

## Installing part 1 of 1.

/usr/local/bin/lsof

/usr/local/bin/sparcv7/lsof

/usr/local/bin/sparcv9/lsof

/usr/local/doc/lsof/00.README.FIRST

/usr/local/doc/lsof/00CREDITS

/usr/local/doc/lsof/00DCACHE

/usr/local/doc/lsof/00DIALECTS

/usr/local/doc/lsof/00DIST

/usr/local/doc/lsof/00FAQ

/usr/local/doc/lsof/00LSOF-L

/usr/local/doc/lsof/00MANIFEST

/usr/local/doc/lsof/00PORTING

/usr/local/doc/lsof/00QUICKSTART

/usr/local/doc/lsof/00README

/usr/local/doc/lsof/00TEST

/usr/local/doc/lsof/00XCONFIG

/usr/local/doc/lsof/lsof.man

/usr/local/man/man8/lsof.8

[ verifying class <none> ]

Installation of <SMClsof> was successful.

See, Solaris isn't so bad :)

Now let's test whether it runs nicely.

[root@db:/home/fmashraqi/install] lsof | more

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

sched 0 root cwd VDIR 32,96 1024 2 /

init 1 root cwd VDIR 32,96 1024 2 /

init 1 root txt VREG 32,96 49016 5650 /sbin/init

init 1 root txt VREG 32,96 51084 3624 /lib/libuutil.so.1

init 1 root txt VREG 32,96 20572 3587 /lib/libdoor.so.1

init 1 root txt VREG 32,96 142996 3614 /lib/libscf.so.1

init 1 root txt VREG 32,96 5436 6795 /platform/SUNW,Sun-Fire-V440/lib/../../sun4u-us3/lib/libc_psr.so.1

init 1 root txt VREG 32,96 845916 3602 /lib/libnsl.so.1

...

We can get lsof to display only the files opened by the mysqld process. First we need its PID:

[root@db:/home/fmashraqi/install] ps -ef | grep mysqld

mysql 26297 26275 0 Dec 29 ? 8263:23 /usr/local/mysql/bin/mysqld --defaults-file=/opt/db/mysql/product/production/et

root 11143 10642 0 23:44:13 pts/18 0:00 grep mysqld

root 26275 1 0 Dec 29 ? 0:00 /bin/sh /opt/bin/mysqld_safe --defaults-file=/opt/d
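
If the PID is needed in a script rather than read off the screen, the same ps output can be filtered with awk. The following is only a sketch: pids_from_ps is a hypothetical helper name, and it assumes the `ps -ef` layout shown above, where the PID is the second column.

```shell
# Hypothetical helper: print the PID (2nd column of `ps -ef`) of every
# process whose line matches the given regular expression.
# Any line containing "grep" is skipped, so the grep/awk pipeline itself
# is not reported (this also hides real processes with "grep" in their
# command line, which is acceptable for a quick lookup).
pids_from_ps() {
    awk -v name="$1" '$0 ~ name && $0 !~ /grep/ { print $2 }'
}

# Example (not run here):
#   ps -ef | pids_from_ps 'mysqld( |$)'
# The "( |$)" part keeps mysqld_safe from matching.
```

On systems that ship pgrep, `pgrep -x mysqld` is a simpler way to get the same number.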

Once we have the PID, we can use the -p option to filter by PID.

[root@db:/] lsof -p 26297 | more

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

mysqld 26297 mysql cwd VDIR 118,102 6656 7852 /opt/db/mysql/data

mysqld 26297 mysql txt VREG 118,102 6342120 7831 /opt/db/mysql/mysqld

mysqld 26297 mysql 0r VCHR 13,2 6815752 /devices/pseudo/mm@0:null

mysqld 26297 mysql 1w VREG 118,102 24343140 7853 /opt/db/mysql/data/db.err

mysqld 26297 mysql 2w VREG 118,102 24343140 7853 /opt/db/mysql/data/db.err

mysqld 26297 mysql 3u IPv4 0x3000dc9ccc0 0t0 TCP *:3306 (LISTEN)

mysqld 26297 mysql 4r DOOR 0t0 48 /var/run/name_service_door (door to nscd[13895]) (FA:->0x60007e42ac0)

mysqld 26297 mysql 5u unix 105,815 0t0 55050244 /devices/pseudo/tl@0:ticots->/tmp/mysql.sock (0x6000d970e60) (Vnode=0x3001b8ddc40)

mysqld 26297 mysql 6w VREG 118,102 132755952 7561 /opt/db/mysql/data/db-slow.log

mysqld 26297 mysql 7w VREG 118,102 1070493244 8904 /opt/db/mysql/data/db2-bin.003663

mysqld 26297 mysql 8u VREG 118,102 1938 7617 /opt/db/mysql/data/db-bin.index

mysqld 26297 mysql 9uW VREG 118,102 6627000320 7703 /opt/db/mysql/data/db-InnoDB

mysqld 26297 mysql 10u VREG 118,102 128886 7658 /opt/db/mysql/product (/dev/dsk/c0t0d0s6)

mysqld 26297 mysql 11u VREG 118,102 1668 7677 /opt/db/mysql/product (/dev/dsk/c0t0d0s6)

mysqld 26297 mysql 12uW VREG 118,102 277872640 7704 /opt/db/mysql/data/ib_logfile0

mysqld 26297 mysql 13uW VREG 118,102 277872640 7705 /opt/db/mysql/data/ib_logfile1

mysqld 26297 mysql 14u VREG 118,102 28 7683 /opt/db/mysql/data/master.info

mysqld 26297 mysql 15u VREG 118,102 0 7679 /opt/db/mysql/product (/dev/dsk/c0t0d0s6)

mysqld 26297 mysql 16u VREG 118,102 4 7738 /opt/db/mysql/data/db-relay-bin.000002

mysqld 26297 mysql 17u VREG 118,102 46 7718 /opt/db/mysql/data/db-relay-bin.index

mysqld 26297 mysql 18u VREG 118,102 28 7721 /opt/db/mysql/data/relay-log.info

mysqld 26297 mysql 19r VREG 118,102 4 7716 /opt/db/mysql/data/db-relay-bin.000001

mysqld 26297 mysql 20u IPv4 0x60003395380 0x6ff2c0a TCP db.domain.net:3306->st4.domain.net:50189 (ESTABLISHED)

mysqld 26297 mysql 21u VREG 118,102 35197 8810 /opt/db/mysql/product (/dev/dsk/c0t0d0s6)

--More--

If we don't want to have to search for the PID, we can simply specify the process name with the -c option:

[root@db:/] lsof -c mysqld

To count how many times the process has each file open (a rough proxy for how many LWPs are using it), try something like the following:

[root@db:/] lsof -p 26297 > /home/fmashraqi/logs/db2.lsof.1536; cat /home/fmashraqi/logs/db2.lsof.1536 | awk '{print $9}' | awk 'BEGIN {FS=":"} {print $1}' | sort | uniq | while read i; do num=` grep "$i" /home/fmashraqi/logs/db2.lsof.1536 | wc -l`; echo "$num $i"; done | more

After lsof has been installed, it is well worth reading the 00.README.FIRST file in /usr/local/doc/lsof.

Although I haven't done so myself, remember that building your own lsof is highly recommended over using a pre-built binary.
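
Coming back to the per-file count from earlier: the sort, uniq, and grep loop can be collapsed into a single awk pass. This is a sketch rather than a drop-in replacement. count_by_name is a hypothetical helper name, it assumes the name sits in the ninth column as in the one-liner, and it counts exact matches where the grep version counts substring matches, so the numbers can differ slightly.

```shell
# Hypothetical helper: read lsof output on stdin and print
# "<count> <name>" for each distinct value of column 9,
# keeping only the part before the first ":".
# NR > 1 skips the header line; NF >= 9 skips rows with missing columns.
count_by_name() {
    awk 'NR > 1 && NF >= 9 { split($9, part, ":"); count[part[1]]++ }
         END { for (n in count) print count[n], n }' |
    sort -k1,1nr -k2
}

# Example (not run here):
#   lsof -p 26297 | count_by_name | more
```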

Who is using a given file?

lsof can also tell us who is using a specified file:

[root@db:/] lsof /opt/db/mysql/data/db1/subscribers.MYD | more

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

mysqld 26297 mysql 45u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

mysqld 26297 mysql 48u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

mysqld 26297 mysql 82u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

mysqld 26297 mysql 94u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

mysqld 26297 mysql 255u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

mysqld 26297 mysql 321u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

mysqld 26297 mysql 420u VREG 118,102 1176704 7646 /opt/db/mysql/data/db1/subscribers.MYD

To get the total count:

[root@db:/] lsof /opt/db/mysql/data/db1/subscribers.MYD | wc -l

784
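
Keep in mind that wc -l also counts the header line lsof prints, so the figure is one higher than the number of open descriptors. A small sketch that leaves the header out (count_open is a hypothetical helper name):

```shell
# Hypothetical helper: count lsof output rows on stdin, excluding the
# header line. Prints 0 when there is no output at all.
count_open() {
    awk 'NR > 1 { n++ } END { print n + 0 }'
}

# Example (not run here):
#   lsof /opt/db/mysql/data/db1/subscribers.MYD | count_open
```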

To view all the network socket files open on a machine, supply the -i option.

[root@db:/] lsof -i | more

...

mysqld 26297 mysql 2991u IPv4 0x60007853400 0t12414219 TCP db.domain.net:3306->client.domain.net:40252 (ESTABLISHED)

...

For sshd, the lsof -i output may show a connection of type IPv6, similar to the one below:

sshd 23895 root 4u IPv6 0x300153f4200 0t19040 TCP db.domain.net:ssh->client.domain.net:61885 (ESTABLISHED)
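
With thousands of sockets open, a per-state summary is often easier to read than the raw list. The sketch below assumes the TCP state is the last field, in parentheses, as in the lines above. count_tcp_states is a hypothetical helper name.

```shell
# Hypothetical helper: read `lsof -i` output on stdin and print
# "<count> <STATE>" for each TCP state found in the last field,
# e.g. (ESTABLISHED) or (LISTEN). Lines without such a field
# (the header, UDP sockets) are ignored.
count_tcp_states() {
    awk '$NF ~ /^\([A-Z0-9_]+\)$/ { gsub(/[()]/, "", $NF); count[$NF]++ }
         END { for (s in count) print count[s], s }' |
    sort -k1,1nr -k2
}

# Example (not run here):
#   lsof -i | count_tcp_states
```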

To restrict the list of open network connections to a particular host, append the host name to -i:

[root@db:/] lsof -i@client.domain.net

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

mysqld 26297 mysql 30u IPv4 0x6000b106200 0t5170868 TCP db.domain.net:3306->client.domain.net:44608 (ESTABLISHED)

Similarly, we can narrow the output further by specifying the protocol, and optionally the port:

[root@db:/] lsof -iTCP@client.domain.net

# or

[root@db:/] lsof -iTCP@client.domain.net:40259

To view only IPv4 or only IPv6 network connections:

[root@db:/] lsof -i4

[root@db:/] lsof -i6

Another cool thing you can do is to match netstat output with lsof. First find the remote address in netstat output that you are interested in matching. For instance:

[root@db:/] netstat

db.3306 my.46726 66608 0 49232 0 CLOSE_WAIT

Now specify the remote address as a parameter to lsof option -i:

[root@db:/] lsof -i TCP@my:46726

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

mysqld 26297 mysql 627u IPv4 0x60003840040 0t304 TCP db.domain.net:3306->my.domain.net:46726 (CLOSE_WAIT)
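
Note the change in notation: netstat here prints the address as host.port, while lsof expects host:port. If you do this often, the conversion can be scripted. The sketch below assumes a TCP connection and that the port is the last dot-separated component. netstat_to_lsof_addr is a hypothetical helper name.

```shell
# Hypothetical helper: turn a netstat-style "host.port" address into
# the "TCP@host:port" form accepted by lsof -i.
# Only the last "." (the one before the port) is replaced, so dotted
# host names and IPv4 addresses are left intact.
netstat_to_lsof_addr() {
    printf 'TCP@%s\n' "$(printf '%s' "$1" | sed 's/\.\([^.]*\)$/:\1/')"
}

# Example (not run here):
#   lsof -i "$(netstat_to_lsof_addr my.46726)"
```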

If we want to see the files (including network connections) opened by the mysql user, we can use the -u option:

[root@db:/] lsof -umysql

To see files opened by either the mysql user or the root user:

[root@db:/] lsof -umysql,root

We can also see files opened by all users except a given one, here root, by prefixing the name with ^:

[root@db:/] lsof -u ^root

To tell lsof not to look up host names, use the -n option:

[root@db:/] lsof -n

Check out the lsof FAQ page.